- #IN CODA2 PULL EXTERNAL SITE TO INSPECT ELEMENT AND CODE HOW TO#

- #IN CODA2 PULL EXTERNAL SITE TO INSPECT ELEMENT AND CODE#

- #IN CODA2 PULL EXTERNAL SITE TO INSPECT ELEMENT AND CODE WINDOWS#

Setup for the content on the site was a bit uncanny, so I couldn't start directly with Selenium and Node. Also, unfortunately, the data on the site was huge. I had to quickly come up with an approach to first crawl all the links and then pass those on for detailed crawling of each page. That's where I learned this cool stuff with the browser Console API. You can use this on any website without much setup, as it's just JavaScript.

High Level Overview

For crawling all the links on a page, I wrote a small piece of JS in the console. This JavaScript crawls all the links (it takes 1–2 hours, as it also handles pagination) and dumps a JSON file with all the crawled data. The thing to keep in mind is that the website needs to behave like a single page application, so that moving to the next page does not reload it; if the page reloads, your console code will be gone. Medium does not refresh the page in some scenarios. For now, let's crawl a story and save the scraped data in a file from the console automatically after scraping.

1. Get the console object instance from the browser. The script uses the Console API to clear the console before logging new data: it declares console.API and checks whether console._commandLineAPI is defined (typeof console._commandLineAPI != 'undefined').

2. I'll assume that you have a Medium story open in your browser. The script defines the DOM element attributes used to extract the story title, clap count, user name, profile image URL, profile description and read time of the story, respectively. These are the basic things I want to show for this story; you can add a few more elements, like extracting the links from the story, all images, or embed links.

3. Defining our Senior helper function - the beast. As we crawl the page for different elements, we save them in a collection. This collection is passed to one of the main functions, which we have named console.save. The task of this function is to dump a CSV / JSON file with the data passed to it. A Blob object represents a file-like object of immutable, raw data; Blobs represent data that isn't necessarily in a JavaScript-native format. The created blob is attached to a link tag on which a click event is triggered, so the browser downloads the file. Putting all the pieces of the code together gives the complete script.

4. Let's execute our console.save() in the browser to save the data in a file. For this, you can go to a story on Medium and execute this code in the browser console.
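A minimal sketch of the console script described above, assuming it runs in a modern browser console (Blob, URL.createObjectURL and document are browser APIs). The CSS selectors in SELECTORS are hypothetical placeholders, not Medium's real markup, so inspect the story and substitute the actual ones. The helper names scrapeStory, toFileContent and clearConsole are illustrative, not taken from the original script. console.API falls back to the standard console when console._commandLineAPI is missing, and console.save follows the approach described in the text: wrap the data in a Blob, attach it to a link and trigger a click.

```javascript
// Sketch of the console scraper, assuming a browser console with a DOM.
// All selectors and helper names below are hypothetical placeholders.

// 1. Pick a console object for clearing the console before logging new data.
//    console._commandLineAPI only exists in some DevTools builds.
console.API =
  typeof console._commandLineAPI !== 'undefined'
    ? console._commandLineAPI
    : console;

function clearConsole() {
  if (typeof console.API.clear === 'function') console.API.clear();
}

// 2. Hypothetical selectors for the fields listed in the article.
//    Replace them with the ones you find via Inspect Element.
const SELECTORS = {
  title: 'h1',
  claps: '.story-claps',
  userName: '.author-name',
  profileImage: 'img.author-avatar',
  profileDescription: '.author-bio',
  readTime: '.read-time',
};

// Collect one record; a missing element becomes null instead of throwing.
function scrapeStory(root) {
  const record = {};
  for (const [field, selector] of Object.entries(SELECTORS)) {
    const el = root.querySelector(selector);
    // Images carry their URL in src; other fields use their visible text.
    record[field] = el ? (el.src || el.textContent.trim()) : null;
  }
  return record;
}

// Serialize the collection as CSV (every field quoted) or pretty-printed JSON.
function toFileContent(data, format) {
  if (format !== 'csv') return JSON.stringify(data, null, 2);
  const rows = Array.isArray(data) ? data : [data];
  const headers = Object.keys(rows[0] || {});
  const quote = (v) => `"${String(v ?? '').replace(/"/g, '""')}"`;
  const lines = rows.map((row) => headers.map((h) => quote(row[h])).join(','));
  return [headers.map(quote).join(','), ...lines].join('\n');
}

// 3. console.save: wrap the data in a Blob, attach it to a link, click it.
console.save = function (data, filename = 'console.json') {
  if (!data) {
    console.error('console.save: no data');
    return;
  }
  const format = filename.endsWith('.csv') ? 'csv' : 'json';
  const blob = new Blob([toFileContent(data, format)], {
    type: format === 'csv' ? 'text/csv' : 'application/json',
  });
  const url = URL.createObjectURL(blob);
  const link = document.createElement('a');
  link.href = url;
  link.download = filename;
  document.body.appendChild(link);
  link.click(); // triggers the download
  link.remove();
  // Revoke the object URL a little later so the download can start first.
  setTimeout(() => URL.revokeObjectURL(url), 1000);
};
```

With a story open, run clearConsole() and then console.save(scrapeStory(document), 'story.json'); a filename ending in .csv produces CSV instead. The object URL is revoked after a short delay rather than immediately, because revoking it before the browser has picked up the download can cancel it in some browsers.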

#IN CODA2 PULL EXTERNAL SITE TO INSPECT ELEMENT AND CODE HOW TO#

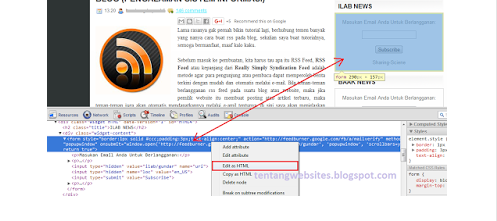

Right click on the part of the web page whose source code you want to see, then choose the menu entry that starts with "Inspect". Alternatively, to open the inspector without going to a particular part, press Ctrl + Shift + I.

By Praveen Dubey
How to use the browser console to scrape and save data in a file with JavaScript
Photo by Lee from Unsplash

A while back I had to crawl a site for links, and then use those page links to crawl data with Selenium or Puppeteer.

#IN CODA2 PULL EXTERNAL SITE TO INSPECT ELEMENT AND CODE WINDOWS#

One of the most useful tools for a web developer is the Inspect Element tool. It lets you quickly jump to the important part of the code to see what's going on there, and I probably use it more than any other tool. The best part is that it shows you what's going on in the final render of the web page. If you're only looking at the backend, or at the style.css file, you might miss an important piece of code that completely changes how the user sees that part of the page.

How to open Inspect Element in Windows browsers (Chrome, Firefox, IE): the process is the same in all of them.
